Part 1: Framing the Problem and Shaping the Solution

At Agemo, we’re on a mission to revolutionise software development through AI. We believe in empowering people to create sophisticated software solutions without needing deep technical expertise. CodeWords, our core product, is a platform that transforms ideas into deployed software, marking our first step toward that ambitious mission. From natural language user prompts, CodeWords plans, builds, tests and deploys fully functional backend functions.

In this blog post, we’ll discuss how the research that makes this possible, including neurosymbolic reasoning, reinforcement learning and process-guided search, led us to take on an unexpected summer side quest: tackling the ARC-AGI challenge.

The ARC Challenge

The ARC (Abstraction and Reasoning Corpus) challenge was first introduced by François Chollet, a researcher at Google and the creator of Keras, in his 2019 paper, “On the Measure of Intelligence”. Chollet specifically designed the dataset to test an AI system’s true intelligence, measured as its ability to perform abstraction, reasoning and problem solving on unseen challenges. In the five years since its release, ARC has proven itself a rare example of a benchmark that resists being “gamed” by end-to-end trained deep learning models.

While modern AI systems excel at most benchmarks and may superficially appear to perform in-context learning and generalisation, ARC exposes their reliance on memorisation and interpolation. Even state-of-the-art AI systems such as OpenAI’s o1 model fail spectacularly when tested on ARC’s hidden test set.

Earlier this year, ARC caught our attention when Mike Knoop (co-founder, Zapier) teamed up with Chollet to launch a public competition for the ARC benchmark. The dataset consists of a collection of unique tasks, each of which is presented as one or more pairs of example input-output coloured grids and a final input grid, for which the output grid must be predicted.
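Some readers may be new to the dataset. In the public ARC repository, each task is a JSON file with two lists: a `train` list of demonstration pairs and a `test` list. Every grid is a list of rows of integers from 0 to 9, where each integer encodes a colour. The public files include the test outputs so that solutions can be scored locally. The sketch below loads a made-up toy task (not a real ARC task) in that layout into numpy arrays. The `load_task` helper is our own illustration.

```python
import json

import numpy as np

# A made-up toy task in the ARC JSON layout: "train" holds demonstration pairs,
# "test" holds the input(s) whose output must be predicted.
# Each grid is a list of rows; each cell is a colour index from 0 to 9.
TOY_TASK_JSON = """
{
  "train": [
    {"input": [[0, 1], [0, 0]], "output": [[1, 0], [0, 0]]},
    {"input": [[2, 0], [0, 3]], "output": [[0, 2], [3, 0]]}
  ],
  "test": [
    {"input": [[0, 0], [4, 0]], "output": [[0, 0], [0, 4]]}
  ]
}
"""


def load_task(text: str) -> dict:
    """Parse an ARC-style task and convert every grid to a numpy array."""
    raw = json.loads(text)
    return {
        split: [{key: np.array(grid, dtype=np.int8) for key, grid in pair.items()} for pair in pairs]
        for split, pairs in raw.items()
    }


task = load_task(TOY_TASK_JSON)
for pair in task["train"]:
    print(pair["input"].shape, "->", pair["output"].shape)
```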

Most ARC tasks, such as the example shown, are extremely easy for humans to solve. We do so without requiring any knowledge or experience beyond basic visual understanding and what Chollet refers to as Core Knowledge Priors (e.g. objectness, goal-directedness, numbers and counting, basic geometry). While average human performance is estimated at around 75%, the current leading submission achieves only 55% and OpenAI’s o1 achieves only 18%.

Why We Joined the Challenge

At Agemo, our research team is constantly working to improve the core intelligence powering CodeWords. Although software may seem pretty far removed from ARC grid puzzles, our focus areas—reasoning, knowledge distillation, reinforcement learning from machine feedback (RLMF), and inference-time optimisations—align closely with the skills required to tackle the ARC-AGI benchmark.

Once we recognised that many of the building blocks for a neurosymbolic ARC solver were already in place within our existing system, the overlap between ARC's challenges (inductive reasoning, planning, skill acquisition, compute efficiency) and our research agenda became too compelling to ignore.

Framing the Problem: Beyond Deep Learning and Discrete Search

The first step in tackling ARC is deciding on a broad solution strategy. As François Chollet himself points out, the most promising approaches will merge the deep learning paradigm with discrete program search:

"We have two approaches that have basically no overlap, that are doing quite well. They're very much at two opposite ends of one spectrum. On one end, you have these extremely large banks of millions of vector programs, but very shallow recombination, simplistic recombination. On the other end, you have very simplistic DSLs, 100-200 primitives, but very deep, very sophisticated program search. The solution is going to be somewhere in between. The people who are going to be winning the ARC competition and making the most progress towards near-term AGI are going to be those that manage to merge the deep learning paradigm and the discrete program search paradigm into one elegant way."

This sentiment aligns perfectly with our research thesis at Agemo. We believe that automated software generation won't be solved by purely symbolic program search, nor by end-to-end training of hyperscale deep learning models. The answer lies in a neurosymbolic approach, where a deep learning model (in our case, an LLM) guides a symbolic reasoning engine.
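A common way to let an LLM guide a symbolic engine is a propose-and-verify loop. The model proposes candidate programs. A symbolic checker then runs each one on the demonstration pairs and keeps only the programs that reproduce every output exactly. The sketch below illustrates that general pattern, not our solver. `propose_candidates` is a hypothetical stand-in for an LLM call and simply yields two hard-coded programs. A real system would also need to sandbox `exec` on model output.

```python
from typing import Callable, Iterable, Optional

import numpy as np

Grid = np.ndarray


def propose_candidates(task_description: str) -> Iterable[str]:
    """Hypothetical stand-in for an LLM: yields candidate programs as source code."""
    yield "def solve(grid):\n    return np.rot90(grid)\n"
    yield "def solve(grid):\n    return np.fliplr(grid)\n"


def compile_candidate(source: str) -> Optional[Callable[[Grid], Grid]]:
    """Turn candidate source into a callable; return None if it fails to run."""
    namespace = {"np": np}
    try:
        exec(source, namespace)  # a real system would sandbox untrusted code
    except Exception:
        return None
    return namespace.get("solve")


def verify(solve: Callable[[Grid], Grid], train_pairs) -> bool:
    """Symbolic check: the program must reproduce every demonstration output exactly."""
    for inp, out in train_pairs:
        try:
            pred = np.asarray(solve(inp))
        except Exception:
            return False
        if not np.array_equal(pred, out):  # also False when shapes differ
            return False
    return True


def search(train_pairs, task_description: str = "", budget: int = 10):
    """Return the first proposed program that passes verification, or None."""
    for i, source in enumerate(propose_candidates(task_description)):
        if i >= budget:
            break
        solve = compile_candidate(source)
        if solve is not None and verify(solve, train_pairs):
            return solve
    return None
```

With two demonstration pairs for a left-right mirror task, the `np.rot90` candidate fits one pair but fails the other. The loop then moves on and returns the `np.fliplr` candidate.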

Shaping the Solution: A Flexible, Object-Centric Approach

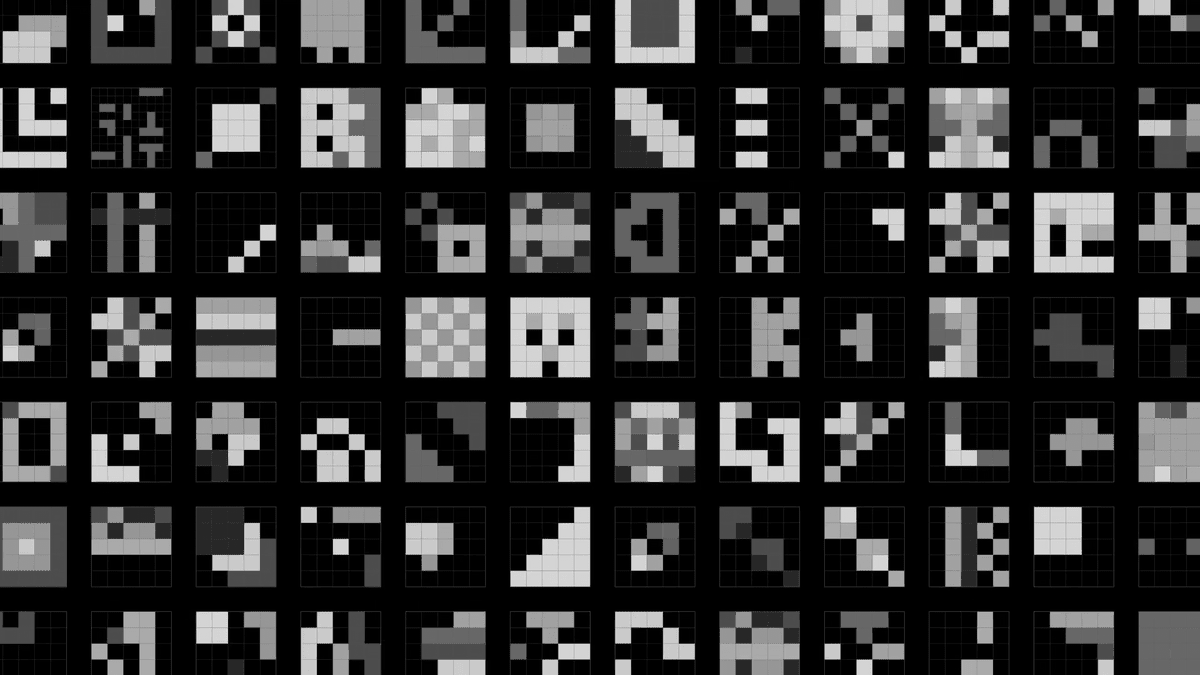

With our broad strategy in place, we needed to define or constrain the space of programs in which our system would search for candidate solutions. Many approaches to ARC involve creating a Domain-Specific Language (DSL) with primitives tailored to solve ARC tasks. Michael Hodel, of the currently leading team, MindsAI, created and open-sourced an impressive ARC DSL containing 160 primitives that were manually crafted over several months in order to compose solutions to the 400 ARC training tasks. Here is an example solution for task 4c5c2cf0, which is shown in the above image:

The function takes an input array and, through repeated function composition from the set of 160 primitives, arrives at a transformation to the corresponding output grid.
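To make "repeated function composition" concrete, here is a toy illustration built from a handful of primitives written in numpy. These primitives are hypothetical and our own. They are not taken from Hodel's DSL, and the names do not mirror his. In this style, a "solution" is simply a pipeline of primitives.

```python
from functools import reduce

import numpy as np


# Toy primitives of our own (hypothetical, not Hodel's DSL): each maps a grid to a grid.
def flip_rows(grid):
    return np.flipud(grid)


def flip_cols(grid):
    return np.fliplr(grid)


def mirror_right(grid):
    """Append a left-right mirror image of the grid to its right."""
    return np.hstack([grid, flip_cols(grid)])


def mirror_down(grid):
    """Append an upside-down mirror image of the grid below it."""
    return np.vstack([grid, flip_rows(grid)])


def pipeline(*primitives):
    """Compose primitives, applying them left to right: pipeline(f, g)(x) == g(f(x))."""
    return lambda grid: reduce(lambda acc, fn: fn(acc), primitives, grid)


# A "program" for a toy task: tile one quadrant into a four-way symmetric grid.
solve_symmetric_tile = pipeline(mirror_right, mirror_down)

# result has shape (4, 4) and equals both of its own mirror images
result = solve_symmetric_tile(np.array([[1, 2], [3, 0]]))
```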

While it was tempting to piggy-back on this hard work and adopt an existing DSL like Hodel's, we wanted our solution to demonstrate skill acquisition—learning new primitives on the fly to solve unseen tasks. There's no doubt that performance can be bolstered by defining more and more primitives, thereby encoding more prior knowledge into the system. However, this approach results in a less “intelligent” system according to Chollet's measure (the intelligence is instead that of the engineer who designs all the primitives).

Our preferred approach, just like our approach to software reasoning, is to provide a framework or environment within which we can train an LLM to reason about the target domain. ARC-specific DSLs like the above, in an effort to explicitly encode extremely domain-specific logic, often become overly restrictive and abstruse. While this isn't a problem for discrete program search systems, our intention is to guide an LLM to generate candidate solutions expressed in a DSL. We're strong believers that to maximise an LLM's chance of success, we should let it use the tools with which it is most familiar. Why force an LLM to use a completely novel grammar and syntax when it has already been pre-trained to excel at manipulating 2D arrays using numpy?
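The point about familiar tools is easy to see in practice. Many ARC-style operations are one-liners in standard numpy, an idiom an LLM will have seen countless times during pre-training. The helper names below are our own illustrative choices. They are not part of any published framework.

```python
import numpy as np


def recolour(grid: np.ndarray, old: int, new: int) -> np.ndarray:
    """Replace every cell of colour `old` with colour `new` using a boolean mask."""
    out = grid.copy()
    out[grid == old] = new
    return out


def crop_to_content(grid: np.ndarray, background: int = 0) -> np.ndarray:
    """Crop to the bounding box of all non-background cells (assumes at least one exists)."""
    ys, xs = np.nonzero(grid != background)
    return grid[ys.min(): ys.max() + 1, xs.min(): xs.max() + 1]


def colour_counts(grid: np.ndarray) -> np.ndarray:
    """Count the cells of each of the 10 ARC colours."""
    return np.bincount(grid.ravel(), minlength=10)


grid = np.array([[0, 0, 0, 0],
                 [0, 3, 3, 0],
                 [0, 0, 3, 0],
                 [0, 0, 0, 0]])
cropped = crop_to_content(grid)  # the 2x2 block containing the colour-3 shape
flipped = np.flip(cropped, axis=1)  # a left-right mirror, as used for symmetry tasks
```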

Inspired by Ferré's (2023) "object-centric" approach to modelling ARC tasks, we designed a pseudo-DSL based on how humans naturally describe solutions: first by describing the input, then by explaining how to generate the output from the input elements.

Our framework revolves around “grid models” for a given ARC task:

- An input grid model parsimoniously describes the space of valid input grids for that task. It declares the variable and invariant properties of the grids in that task, e.g. the presence of certain shapes or patterns, their colours, sizes, etc.

- An output grid model does the same for output grids, with certain properties declared in relation to the input grid model.

We implemented this using Pydantic models, which provide an elegant way to parse and validate grid arrays.

To demonstrate, let’s consider again the example grids for task 4c5c2cf0. Based on the image above, an input grid model for this task should encode that valid input grids are all 14x14 in size, have a black background and contain two coloured shapes. In the example input model below, we declare exactly that. Its most powerful feature is the ability to easily define a class method that automatically parses a numpy array into an instance of this grid class.
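The following is a minimal sketch of how such an input model might look. It is not the model from our framework. It assumes Pydantic v2 (`field_validator`), and every class, field and method name in it is hypothetical. It also assumes that each shape is a single object in a single colour, with distinct colours per shape. The `from_array` class method parses a numpy grid. The validators then reject any grid that is not 14x14, not on a black background, or does not contain exactly two shapes.

```python
import numpy as np
from pydantic import BaseModel, field_validator


class Shape(BaseModel):
    """A single-colour object: its colour, bounding-box origin and pixel mask."""
    colour: int
    top: int
    left: int
    mask: list[list[bool]]  # True where the object has a pixel inside its bounding box


class InputGrid(BaseModel):
    """Hypothetical input model: 14x14, black background, exactly two coloured shapes."""
    height: int
    width: int
    background: int
    shapes: list[Shape]

    @field_validator("height", "width")
    @classmethod
    def check_size(cls, v: int) -> int:
        if v != 14:
            raise ValueError(f"expected 14, got {v}")
        return v

    @field_validator("background")
    @classmethod
    def check_black(cls, v: int) -> int:
        if v != 0:
            raise ValueError("background must be black (0)")
        return v

    @field_validator("shapes")
    @classmethod
    def check_two_shapes(cls, v: list[Shape]) -> list[Shape]:
        if len(v) != 2:
            raise ValueError(f"expected 2 shapes, got {len(v)}")
        return v

    @classmethod
    def from_array(cls, grid: np.ndarray) -> "InputGrid":
        """Parse a numpy grid into a validated model (raises ValidationError if invalid)."""
        background = int(np.bincount(grid.ravel()).argmax())  # most frequent colour
        shapes = []
        for colour in np.unique(grid):
            if colour == background:
                continue
            ys, xs = np.nonzero(grid == colour)
            top, left = int(ys.min()), int(xs.min())
            mask = grid[top: ys.max() + 1, left: xs.max() + 1] == colour
            shapes.append(Shape(colour=int(colour), top=top, left=left, mask=mask.tolist()))
        return cls(height=grid.shape[0], width=grid.shape[1],
                   background=background, shapes=shapes)
```

Because Pydantic's `ValidationError` is a subclass of `ValueError`, a solver can treat "this grid does not fit the model" as an ordinary exception.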

An output grid model can then be used to declare how the corner shape is copied and reflected around the central shape to form a symmetrical pattern.

The transformation logic is encoded in the from_input class method, which can leverage simple numpy manipulation methods (e.g. np.flip) to transform an arbitrary input instance into a predicted output grid.
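Here is a hedged sketch of a matching output model. It is our own illustration rather than the original code. Every name in it is hypothetical, and it rests on two stated assumptions. First, the "central" shape is taken to be the one that is mirror-symmetric along both axes. Second, all reflected copies are assumed to fit inside the grid. Reflecting a row y about the central shape's middle row gives (top + bottom) - y, and likewise for columns. Each copy is therefore the corner shape's mask passed through `np.flip` and placed at the reflected position. To stay self-contained, the block re-implements a small shape extractor instead of reusing an input model.

```python
import numpy as np
from pydantic import BaseModel


def extract_shapes(grid: np.ndarray, background: int = 0) -> dict:
    """Map each non-background colour to (top, left, mask); assumes one object per colour."""
    shapes = {}
    for colour in np.unique(grid):
        if colour == background:
            continue
        ys, xs = np.nonzero(grid == colour)
        top, left = int(ys.min()), int(xs.min())
        shapes[int(colour)] = (top, left, grid[top: ys.max() + 1, left: xs.max() + 1] == colour)
    return shapes


def is_mirror_symmetric(mask: np.ndarray) -> bool:
    """True if the mask is unchanged by flipping along each axis separately."""
    return np.array_equal(mask, np.flip(mask, axis=0)) and np.array_equal(mask, np.flip(mask, axis=1))


class OutputGrid(BaseModel):
    """Hypothetical output model: the corner shape reflected into all four corners."""
    pixels: list[list[int]]

    @classmethod
    def from_input(cls, grid: np.ndarray) -> "OutputGrid":
        shapes = extract_shapes(grid)
        # Illustrative assumption: the central shape is the mirror-symmetric one.
        central = [c for c, (_, _, m) in shapes.items() if is_mirror_symmetric(m)]
        if len(shapes) != 2 or len(central) != 1:
            raise ValueError("expected one symmetric central shape and one corner shape")
        centre_colour = central[0]
        corner_colour = next(c for c in shapes if c != centre_colour)
        c_top, c_left, c_mask = shapes[centre_colour]
        s_top, s_left, s_mask = shapes[corner_colour]
        h, w = s_mask.shape
        # Row y reflects to (c_top + c_bottom) - y; columns work the same way.
        row_sum = 2 * c_top + c_mask.shape[0] - 1
        col_sum = 2 * c_left + c_mask.shape[1] - 1
        out = grid.copy()
        for flip_r in (False, True):
            for flip_c in (False, True):
                mask, top, left = s_mask, s_top, s_left
                if flip_r:
                    mask, top = np.flip(mask, axis=0), row_sum - (s_top + h - 1)
                if flip_c:
                    mask, left = np.flip(mask, axis=1), col_sum - (s_left + w - 1)
                # Paint the (possibly flipped) copy; assumes it lies inside the grid.
                out[top: top + h, left: left + w][mask] = corner_colour
        return cls(pixels=out.tolist())
```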

The framework was carefully designed with our neurosymbolic approach in mind. It strikes a balance: it imposes enough constraints for us to symbolically verify proposed solutions (testing both input and output grid reconstruction), without restricting expressivity or narrowing the search space of solutions by enforcing a strict DSL.
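One way to read "testing both input and output grid reconstruction" is as three checks, and the sketch below implements that reading. It is our interpretation, not a description of our verifier. First, the input model must parse each training input and render it back exactly. Second, its prediction must match each training output exactly. Third, it must also accept the test inputs. `Candidate` and the toy "sparse" model are hypothetical names chosen for illustration.

```python
from dataclasses import dataclass
from typing import Any, Callable, Sequence, Tuple

import numpy as np


@dataclass
class Candidate:
    """Hypothetical bundle of what a proposer might return for one task."""
    parse: Callable[[np.ndarray], Any]    # grid -> input model; raises if the grid is invalid
    render: Callable[[Any], np.ndarray]   # input model -> grid (for input reconstruction)
    predict: Callable[[Any], np.ndarray]  # input model -> predicted output grid


def check(candidate: Candidate,
          train_pairs: Sequence[Tuple[np.ndarray, np.ndarray]],
          test_inputs: Sequence[np.ndarray]) -> bool:
    """Accept a candidate only if it reconstructs every input and output exactly."""
    try:
        for inp, expected in train_pairs:
            model = candidate.parse(inp)
            if not np.array_equal(candidate.render(model), inp):        # input reconstruction
                return False
            if not np.array_equal(candidate.predict(model), expected):  # output reconstruction
                return False
        for inp in test_inputs:
            candidate.parse(inp)  # the input model must also accept unseen test inputs
    except Exception:
        return False
    return True


def parse_sparse(grid):
    """Toy input model: grid shape plus (row, col, colour) for every non-zero cell."""
    if grid.ndim != 2:
        raise ValueError("grid must be 2-D")
    cells = [(int(y), int(x), int(grid[y, x])) for y, x in zip(*np.nonzero(grid))]
    return {"shape": grid.shape, "cells": cells}


def render_sparse(model):
    grid = np.zeros(model["shape"], dtype=int)
    for y, x, c in model["cells"]:
        grid[y, x] = c
    return grid


def predict_mirror(model):
    """Toy transformation: mirror every cell left to right."""
    _, w = model["shape"]
    mirrored = [(y, w - 1 - x, c) for y, x, c in model["cells"]]
    return render_sparse({"shape": model["shape"], "cells": mirrored})


candidate = Candidate(parse_sparse, render_sparse, predict_mirror)
```

The input-reconstruction check catches "lossy" input models that discard information, even when they happen to predict the outputs correctly.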

Our initial set of just 10 core primitives (shapes such as Rectangle, Line and Bitmap, plus a couple of useful helpers, e.g. ShapeExtractor and PatternMatcher) proved surprisingly effective. When we ran v0 of our neurosymbolic solver (using GPT-4o) against a random subset of 100 tasks from the public evaluation set, it generated correct solutions for 18% of them. A version of the same solver using Hodel’s DSL instead correctly solved 16%.
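The post doesn't publish the primitives themselves, so the following is only a guess at their general flavour. It sketches a hypothetical `Rectangle` primitive, and its fields and methods are our own assumptions, not the actual Rectangle, Line or Bitmap classes. The primitive can render itself onto a canvas, and it can detect whether the cells of a given colour form a solid rectangle. It uses only `BaseModel` and `Field` constraints, so it should work with either Pydantic v1 or v2.

```python
from typing import Optional

import numpy as np
from pydantic import BaseModel, Field


class Rectangle(BaseModel):
    """Hypothetical primitive: a solid axis-aligned rectangle of one colour."""
    top: int = Field(ge=0)
    left: int = Field(ge=0)
    height: int = Field(ge=1)
    width: int = Field(ge=1)
    colour: int = Field(ge=0, le=9)

    def render(self, canvas: np.ndarray) -> np.ndarray:
        """Return a copy of the canvas with this rectangle painted on it."""
        out = canvas.copy()
        out[self.top: self.top + self.height, self.left: self.left + self.width] = self.colour
        return out

    @classmethod
    def detect(cls, grid: np.ndarray, colour: int) -> Optional["Rectangle"]:
        """Return a Rectangle if all cells of `colour` form one solid rectangle, else None."""
        ys, xs = np.nonzero(grid == colour)
        if ys.size == 0:
            return None
        top, left = int(ys.min()), int(xs.min())
        height, width = int(ys.max()) - top + 1, int(xs.max()) - left + 1
        # Solid only if every cell in the bounding box has this colour.
        if ys.size != height * width:
            return None
        return cls(top=top, left=left, height=height, width=width, colour=int(colour))
```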

Looking Ahead: Skill Acquisition and Guided Program Search

The ARC competition is still ongoing and we'll share more explicit details in future posts, but we want to highlight a key feature of our approach: the ability to expand the set of primitives on the fly. Our automated solver encourages the LLM to define new primitives when it can't solve a task with the existing set. Through an iterative process of running our solver and fine-tuning on correct solutions, we were able to generate valid solutions to more than 85% of ARC training tasks completely autonomously, starting from a seed set of only 3 hand-written example solutions.
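The post doesn't describe how new primitives are admitted, so here is one plausible mechanism as a generic sketch, not our solver. A hypothetical `PrimitiveLibrary` runs candidate code. If the code's `solve` function reproduces every training output, the library keeps any helper functions that code defined. Those helpers then become available to later candidates, which is how the library grows.

```python
from typing import Callable, Dict, List

import numpy as np


class PrimitiveLibrary:
    """Hypothetical growing library of grid primitives, keyed by name."""

    def __init__(self) -> None:
        self._primitives: Dict[str, Callable] = {}

    def names(self) -> List[str]:
        return sorted(self._primitives)

    def namespace(self) -> dict:
        """Globals handed to candidate code: numpy plus every accepted primitive."""
        return {"np": np, **self._primitives}

    def try_solution(self, source: str, train_pairs) -> bool:
        """Run candidate source; if its solve() passes all pairs, keep the new helpers it defined."""
        ns = self.namespace()
        before = set(ns)
        try:
            exec(source, ns)  # sandbox untrusted model output in practice
            solve = ns["solve"]
            ok = all(np.array_equal(solve(inp), out) for inp, out in train_pairs)
        except Exception:
            return False
        if ok:
            for name in set(ns) - before - {"solve", "__builtins__"}:
                if callable(ns[name]):
                    self._primitives[name] = ns[name]
        return ok
```

Only verified code enters the library, so a failed attempt cannot pollute it. The verified (task, source) pairs are also natural raw material for a fine-tuning step, which this sketch does not cover.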

In Part 2 of this series, we’ll dive deeper into the automated solver that we’ve built and explore how its components work together to tackle unseen tasks. After that, we’ll discuss how we’ve implemented RLMF (Reinforcement Learning from Machine Feedback) in our system, including hacking vLLM and implementing DPO (Direct Preference Optimization) for vision models; how we learn from incorrect predictions; and our investigations into inference-time performance improvements, such as navigating the exploration/exploitation tradeoff using Q-learning.

By pushing ourselves to tackle this fun yet deceptively difficult challenge, we’re not just competing in a benchmark—we’re advancing the core technologies that power our mission to democratise software development through AI.

Stay tuned as we continue to share our journey and insights from our Summer of ARC-AGI!